- Introduction

- Metrics

- Hash Table Lookup Process

- Standard Lookup Process

- Improvement Outline

- Locating Slots

- Enhancing Locality

- Reducing Comparisons

Introduction

I recently watched a YouTube video titled C++Now 2018: You Can Do Better than std::unordered_map: New Improvements to Hash Table Performance. The video presents incredible improvements in hash table performance. Here, I will summarize the main points on how to achieve these improvements, which can also be useful for other data structures.

Metrics

We will focus on the performance of lookup process.

Hash Table Lookup Process

The typical lookup process in a hash table involves:

- Calculating the hash value for the input key and determining which slot it belongs to.

- Checking all keys in that slot to see if any match the input key.

Standard Lookup Process

Let’s examine how C++’s std::unordered_map performs lookups:

- It uses the

hash()function to calculate the hash value for the input key and the modulo operator to determine the slot (hash % len(buckets)). - All keys that belong to the same slot are arranged in a linked list. After determining the slot, the linked list is traversed to check if the input key is present. If no key matches, it is an unsuccessful lookup.

Improvement Outline

We can improve the performance of hash tables in two main steps:

- Reduce the calculation required to locate a slot.

- Enhance the locality of keys in the same slot and reduce equality comparisons.

Locating Slots

A straightforward improvement is to replace the modulo operation with a bitwise AND operation. Instead of using hash % len(buckets), we use hash & (len(buckets) - 1). As long as len(buckets) is a power of 2, both operations yield the same result. However, hash & (len(buckets) - 1) is much faster due to the efficiency of bitwise operations.

Enhancing Locality

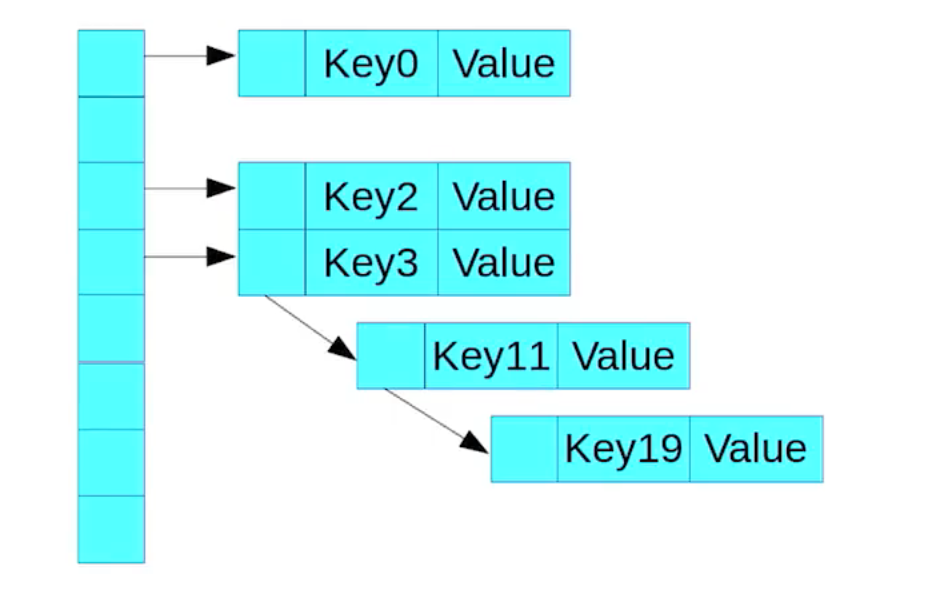

Before discussing how to enhance locality, let’s review how std::unordered_map organizes its memory.

The main issue is that std::unordered_map uses a linked list to handle hash collisions, which is not a memory-compact data structure.

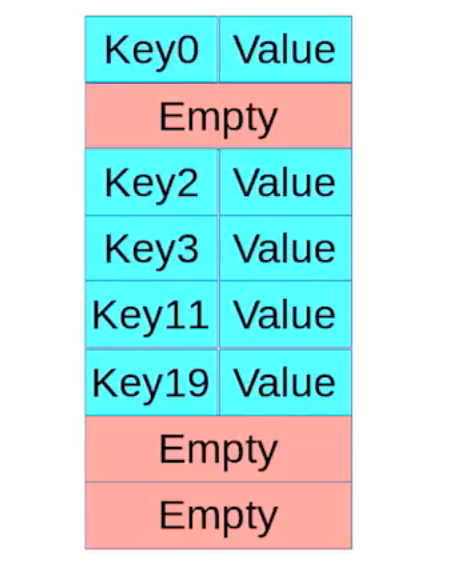

Google Dense Hash Map

One way to enhance locality is to store a key in the next available slot when there is a hash collision. This approach is used in Google’s dense hash map. The side effect is that we need to set an empty key that will never be used in the hash table. During the lookup process, we traverse through slots and need to know when to stop.

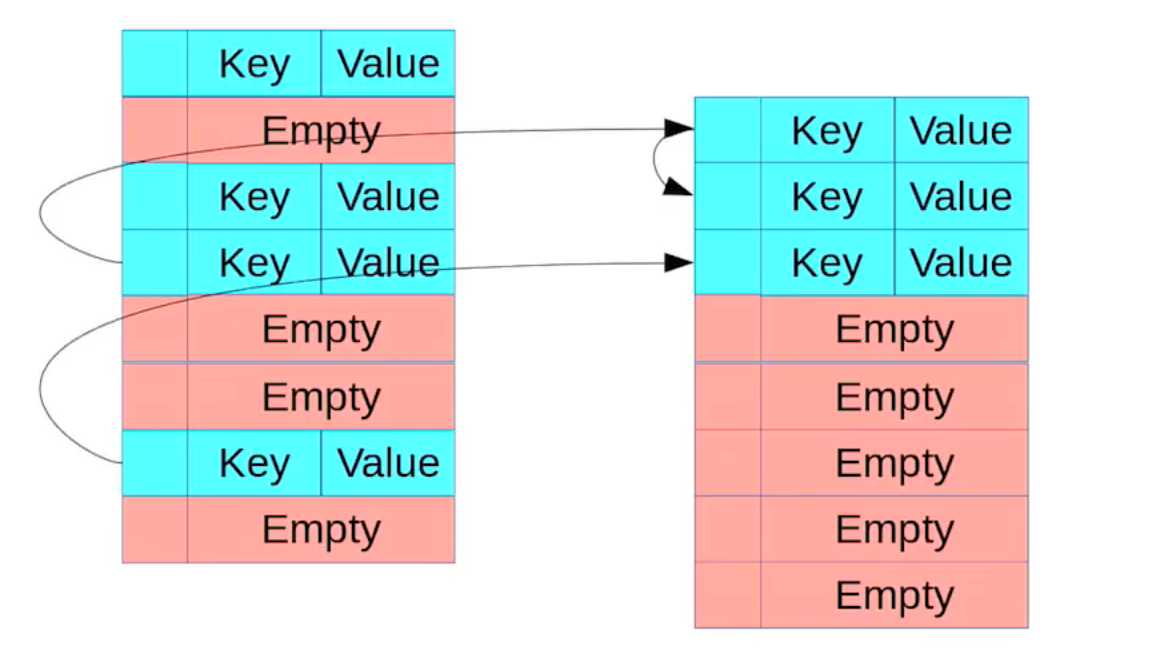

Ptr Map

Another approach is to improve the locality of the linked list by using an array to implement it. This hash map is called a ptr map.

Reducing Comparisons

When traversing through all possible keys, we have to run the equal_to() function for each key. We can use some data structures to reduce the number of comparisons.

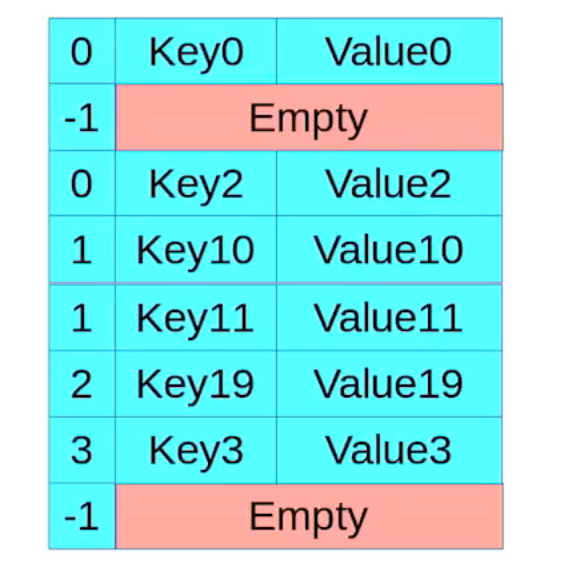

Robin Hood Hash Map

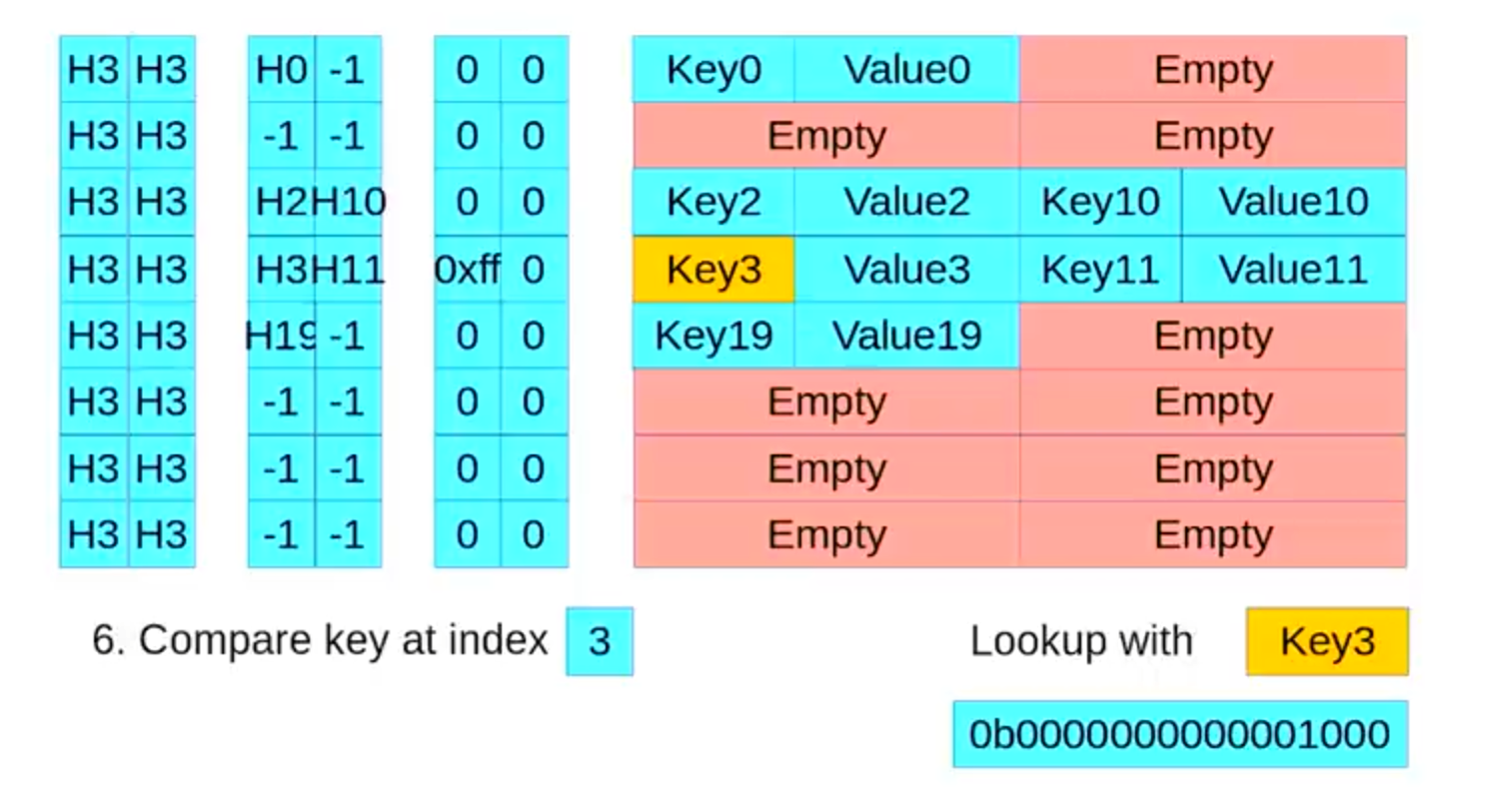

Based on Google’s dense hash map, we can add auxiliary data to identify which slot the key belongs to. For example:

In this example:

- Key2 and Key10 have the same hash value and belong to the same slot where Key2 is stored.

- Key11, Key9, and Key3 have the same hash value and belong to the same slot where Key10 is stored.

The advantage of this hash map is that before hitting an empty slot, we can skip keys that do not have the target hash value. This hash map is called a Robin Hood hash map.

Google Flat Hash Map

Another design is Google’s flat hash map, which uses a metadata structure. Each slot takes up one byte in the metadata: 1 bit indicates emptiness, and 7 bits indicate a hash value.

The advantage of Google’s flat hash map is that the metadata is well compacted, and it reduces comparisons by storing a 7-bit hash value, and it can use cpu 16 bytes operation to fast calcaulte.