Introduction

Maps are commonly used as caches in Go. If one goroutine replaces an entire map (assigns a new map to the variable) while other goroutines are reading from it, can this cause concurrency problems? This article explores that question.

Problem Statement

This issue first came up during a code review (CR).


In simplified form, the code has two methods that run concurrently: Read reads data from the cache, while Fresh replaces the entire cache with a new map. In this scenario, could concurrent access make the program misbehave? With this question in mind, the author carried out a series of investigations, recorded below.
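To make the scenario concrete, here is a minimal sketch of the pattern. Only the method names Read and Fresh come from the original code; the Cache type, its data field, and the method signatures are assumptions. In the real program the two methods are called from different goroutines; the usage in main is sequential and only shows the intended behavior.

```go
package main

import "fmt"

// Cache is a hypothetical stand-in for the type under review; the
// article names only its Read and Fresh methods.
type Cache struct {
	data map[string]string
}

// Read looks a key up in whichever map c.data currently points to.
func (c *Cache) Read(key string) (string, bool) {
	v, ok := c.data[key]
	return v, ok
}

// Fresh builds a brand-new map and then replaces the whole cache with it.
// Existing maps are never mutated; only the c.data field is reassigned.
func (c *Cache) Fresh(src map[string]string) {
	m := make(map[string]string, len(src))
	for k, v := range src {
		m[k] = v
	}
	c.data = m
}

func main() {
	c := &Cache{}
	c.Fresh(map[string]string{"greeting": "hello"})
	v, ok := c.Read("greeting")
	fmt.Println(v, ok) // hello true
}
```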

Analysis Process

What Is Replaced During an Entire Map Replacement

In Go, a map value is actually a pointer to the runtime’s hmap structure: the runtime’s makemap functions, which implement make for maps, return a *hmap.


Therefore, a map is not an hmap structure itself but a pointer to an hmap structure. Assigning a value to the entire map is essentially assigning a value to the pointer. The next question is whether this kind of assignment is atomic.

Atomicity of Pointer Assignment

Assuming a 64-bit system, a map is effectively an 8-byte pointer. The author wrote a test program to probe how maps behave under concurrent whole-map replacement.


Two cases were tested separately: a standalone map and a map stored in a struct field. In each case, one goroutine reads from the map while another repeatedly replaces the entire map. Across multiple runs no runtime errors occurred, which suggests that map assignment is atomic.

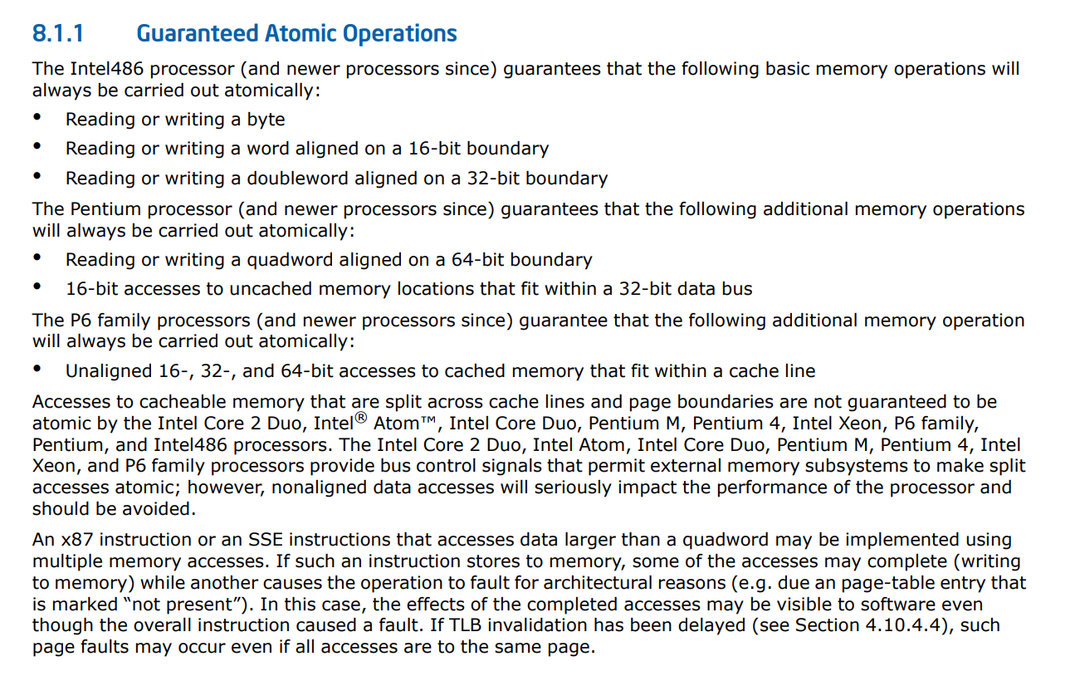
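The experiment can be sketched roughly as follows. The holder type, the key "k", and the iteration count are illustrative assumptions rather than the author’s code. Note that this program contains a data race as defined by the Go memory model, and go run -race will report it; the sketch only probes whether a reader ever observes a broken map in practice.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// holder mirrors the "map inside a struct field" case.
type holder struct {
	data map[string]int
}

// replaceWhileReading performs `iterations` lookups on a standalone map and
// on a struct-field map while another goroutine keeps replacing both maps
// wholesale. Every replacement map contains "k", so as long as each read
// sees some complete map pointer, every lookup succeeds and misses stays 0.
func replaceWhileReading(iterations int) (misses int) {
	standalone := map[string]int{"k": 0}
	h := &holder{data: map[string]int{"k": 0}}

	var stop atomic.Bool
	var wg sync.WaitGroup
	wg.Add(1)
	go func() {
		defer wg.Done()
		for i := 1; !stop.Load(); i++ {
			standalone = map[string]int{"k": i} // whole-map replacement (racy on purpose)
			h.data = map[string]int{"k": i}     // same, through a struct field
		}
	}()

	for i := 0; i < iterations; i++ {
		if _, ok := standalone["k"]; !ok {
			misses++
		}
		if _, ok := h.data["k"]; !ok {
			misses++
		}
	}
	stop.Store(true)
	wg.Wait()
	return misses
}

func main() {
	fmt.Println("misses:", replaceWhileReading(1_000_000))
}
```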

However, the author also came across discussions pointing out that the hardware does not guarantee atomic assignment unconditionally, and Intel’s documentation spells out when it does.

The documentation states that P6-family processors (the first of which was released in 1995) and newer Intel CPUs guarantee that reads and writes of 1, 2, 4, or 8 bytes are atomic as long as the access falls within a single cache line. Accesses that span two cache lines are not guaranteed to be atomic. With this in mind, the author devised a test case along the following lines:

- Allocate a byte array three cache lines long. On the author’s 64-bit machine, a cache line is 64 bytes.

- Locate a cache-line boundary inside the array and take the address 4 bytes before it.

- Place a map (effectively an 8-byte pointer) at that address, so that it straddles two cache lines.

- Concurrently replace and read the map, and watch for runtime errors.


In this test case, the address 4 bytes before a cache-line boundary was located and a map was placed there. Since a map is effectively an 8-byte pointer, the map straddled two cache lines.


The run failed when the program tried to access 0xc14c322330, an invalid address. This suggests that writing an 8-byte pointer that straddles two cache lines is indeed not atomic and can cause runtime errors.

To verify this hypothesis, the author changed alignFromCacheLine to 8, so that the map no longer straddled a cache line. With that change the error no longer occurred, which essentially confirms the hypothesis.

To pin down more precisely how the non-atomic write manifests, the author replaced the map with a uint64 and repeated the experiment.


The test runs two operations concurrently:

- Repeatedly writing one of two uint64 values, 0x1122334455667788 or 0x9900112233445566.

- Reading the value and printing it whenever it matches neither of them.
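A sketch of this uint64 experiment follows, assuming an x86-64 machine with 64-byte cache lines like the author’s (misaligned loads and stores may fault on some other architectures). countTornReads and load are hypothetical names; the two constants are the values from the article. The out-of-line load helper keeps the compiler from hoisting the read out of the loop. Like the map test, this is a deliberate data race and will be flagged by the race detector.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"unsafe"
)

const cacheLineSize = 64 // assumption: 64-byte cache lines, as on the author’s machine

// The two valid values from the article.
const (
	valA uint64 = 0x1122334455667788
	valB uint64 = 0x9900112233445566
)

// straddleOffset returns an index into buf (at least three cache lines long)
// whose address lies alignFromCacheLine bytes before a cache-line boundary.
func straddleOffset(buf []byte, alignFromCacheLine int) int {
	base := uintptr(unsafe.Pointer(&buf[0]))
	next := (base + cacheLineSize) &^ (cacheLineSize - 1) // first boundary above base
	return int(next+cacheLineSize-base) - alignFromCacheLine
}

// load performs a plain, non-atomic read of *p. Keeping it out of line
// stops the compiler from hoisting the read out of the polling loop.
//
//go:noinline
func load(p *uint64) uint64 { return *p }

// countTornReads places a uint64 alignFromCacheLine bytes before a
// cache-line boundary, lets a writer goroutine alternate between valA and
// valB, and counts reads that return neither value.
func countTornReads(alignFromCacheLine, reads int) int {
	buf := make([]byte, 3*cacheLineSize)
	p := (*uint64)(unsafe.Pointer(&buf[straddleOffset(buf, alignFromCacheLine)]))
	*p = valA

	var stop atomic.Bool
	var wg sync.WaitGroup
	wg.Add(1)
	go func() {
		defer wg.Done()
		for i := 0; !stop.Load(); i++ {
			if i%2 == 0 {
				*p = valB
			} else {
				*p = valA
			}
		}
	}()

	torn := 0
	for i := 0; i < reads; i++ {
		if v := load(p); v != valA && v != valB {
			torn++
		}
	}
	stop.Store(true)
	wg.Wait()
	return torn
}

func main() {
	for _, a := range []int{4, 8} {
		fmt.Printf("alignFromCacheLine=%d: %d torn reads\n", a, countTornReads(a, 10_000_000))
	}
}
```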


The output included values such as 0x1122334433445566 and 0x9900112255667788. These are intermediate states that combine the upper four bytes of one valid value with the lower four bytes of the other. Setting alignFromCacheLine to 8, so that the value no longer crossed a cache line, stopped these intermediate values from appearing. This matches the map experiment: a write that crosses a cache line is not atomic, so a concurrent reader can observe a half-written value, which explains the invalid-address errors seen earlier.
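The two observed values can be reproduced by hand. On little-endian x86-64, a uint64 stored 4 bytes before a cache-line boundary keeps its low four bytes in the first line and its high four bytes in the second, so a reader that sees one line updated and the other not yet gets a mix of the two halves. The hypothetical tear helper below simulates that split with encoding/binary.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

// tear simulates a torn 8-byte little-endian write split 4 bytes in:
// the first four bytes (the low half) already hold newVal, while the
// last four bytes (the high half) still hold oldVal.
func tear(oldVal, newVal uint64) uint64 {
	var cur, next [8]byte
	binary.LittleEndian.PutUint64(cur[:], oldVal)
	binary.LittleEndian.PutUint64(next[:], newVal)
	copy(cur[:4], next[:4]) // only the part before the boundary was updated
	return binary.LittleEndian.Uint64(cur[:])
}

func main() {
	const a, b uint64 = 0x1122334455667788, 0x9900112233445566
	fmt.Printf("%#x\n", tear(a, b)) // 0x1122334433445566
	fmt.Printf("%#x\n", tear(b, a)) // 0x9900112255667788
}
```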

Map Initialization Memory Location

According to the previous analysis, placing a map at a memory location that crosses a cache line makes whole-map replacement non-atomic. The earlier experiments used the unsafe package to force maps into such locations. Under normal circumstances, can a map ever end up straddling a cache line?

To answer this, Go’s memory alignment rules need to be considered. Every type has an alignment requirement (its alignment coefficient), which can be queried with unsafe.Alignof, and every variable is placed so that its starting address is a multiple of that alignment.


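On 64-bit platforms, both maps and uint64 values are aligned on 8-byte boundaries. Because a 64-byte cache line is a multiple of 8 bytes, an 8-byte value that starts on an 8-byte boundary always fits within a single line, so these values never span cache lines and do not run into the torn-write problem observed earlier.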
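The arithmetic can also be checked by brute force. This sketch, assuming 64-byte cache lines and using hypothetical helper names, counts how many aligned slots in a 4 KiB range would let an 8-byte value cross a line: first with 8-byte alignment, then with 4-byte alignment, where offset 60 of every line is a straddling slot, as in the earlier experiment.

```go
package main

import "fmt"

const cacheLineSize = 64 // assumption: 64-byte cache lines

// straddles reports whether a size-byte value at addr touches two cache lines.
func straddles(addr, size uintptr) bool {
	return addr/cacheLineSize != (addr+size-1)/cacheLineSize
}

// countStraddling counts addresses in [0, limit) that are multiples of
// align and at which a size-byte value would span two cache lines.
func countStraddling(align, size, limit uintptr) int {
	n := 0
	for addr := uintptr(0); addr < limit; addr += align {
		if straddles(addr, size) {
			n++
		}
	}
	return n
}

func main() {
	fmt.Println(countStraddling(8, 8, 4096)) // 0: 8-aligned 8-byte values never straddle
	fmt.Println(countStraddling(4, 8, 4096)) // 64: one slot per line, at offset 60
}
```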

The author also ran a simple test to verify that maps are 8-byte aligned.


The test found no map that was not aligned on an 8-byte boundary, indicating that Go’s alignment rules keep maps from spanning cache lines. More generally, a value whose size equals its alignment, such as a pointer, a map, or (on 64-bit platforms) a uint64, can never span a cache line; only larger values, such as structs, arrays, and multi-word types like strings and slices, can. Interested readers can consult the "Size and alignment guarantees" section of the Go language specification for details.

Conclusion

Without the unsafe package (whose use is not recommended in business logic), replacing an entire map while other goroutines read from it does not run into the torn-write problem described above, because Go’s alignment rules keep a map within a single cache line. However, if unsafe is used to place a map at an arbitrary address, nothing guarantees that the address respects Go’s alignment rules, and the concurrency issues shown above can occur.

These conclusions, however, are based solely on tests run on a 64-bit Intel platform. Further testing on other hardware platforms is needed to confirm them.