TL;DR:

1. Install the Legado app

2. Run the server and open its web UI, or use the public test web UI

3. Choose a model and generate a subscription URL

4. Import the URL into Legado

5. Enjoy listening to your books

Introduction

This post describes how I designed and implemented TTS Model Server (GitHub), an API server that provides unified access to multiple Text-to-Speech (TTS) engines.

Why Build This?

I wanted a high-quality English TTS solution for listening to books on my Android phone, but I couldn't find a free app that offered both flexibility and high-quality speech output.

After exploring various alternatives, I decided to build a TTS model server myself and integrate it with the open-source Legado app to enable seamless book listening.

Features

Since there are many open-source TTS models, I realized I could build a unified TTS model server that:

✅ Supports multiple TTS models effortlessly

✅ Provides a single API for all models

✅ Allows dynamic frontend configuration based on model-specific parameters

To achieve this, I designed the system with the following components:

- Configurable Frontend – The frontend dynamically generates forms based on model-specific parameters received from the backend.

- Backend Model Abstraction – Each TTS model follows a standard interface, defining its name, required fields, and logic for speech generation.

- Common Server Layer – The API server processes requests, validates inputs, and forwards them to the appropriate TTS model.

Implementation Details

1. Configurable Frontend

Instead of hardcoding form fields in the UI, the backend dynamically provides the required fields for each TTS model.

To manage dynamic forms, I designed the following TypeScript interfaces:

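```typescript
// One selectable value; choosing it may reveal more fields.
interface Option {
  value: string;
  relatedFields?: Field[];
}

// One form input for a model parameter.
interface Field {
  name: string;
  defaultValue: string;
  options: Option[];
  desc: string;
}
```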

Each option can have related fields that need to be displayed when selected. To manage dependencies between fields, I introduced the following wrapper structure:

```typescript
// A field and its dependent fields.
interface Wrapper {
  field: Field;
  children: Wrapper[];
}
```

All fields are wrapped into a hierarchical structure, maintaining parent-child relationships.

When the user selects an option, the system removes unrelated child fields using a Depth-First Search (DFS) algorithm. Then, new fields are added dynamically based on the selected option.

This approach allows the frontend to be fully dynamic, adapting to different TTS models effortlessly.
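
As a rough sketch of the select-and-prune step described above, the following reuses the three interfaces and adds hypothetical helpers (`wrap`, `selectOption`, `visibleNames`) plus made-up sample fields. It assumes field names are unique within a form; the actual frontend code may be organized differently.

```typescript
interface Option { value: string; relatedFields?: Field[]; }
interface Field { name: string; defaultValue: string; options: Option[]; desc: string; }
interface Wrapper { field: Field; children: Wrapper[]; }

// Wrap a field, expanding the related fields of the given (or default) value.
function wrap(field: Field, value: string = field.defaultValue): Wrapper {
  const selected = field.options.filter((o) => o.value === value)[0];
  const related = selected && selected.relatedFields ? selected.relatedFields : [];
  return { field: field, children: related.map((f) => wrap(f)) };
}

// Depth-first search for the wrapper of `name`. On a match, drop its whole
// subtree and rebuild it from the newly selected option.
function selectOption(node: Wrapper, name: string, value: string): boolean {
  if (node.field.name === name) {
    node.children = wrap(node.field, value).children;
    return true;
  }
  for (const child of node.children) {
    if (selectOption(child, name, value)) return true;
  }
  return false;
}

// Pre-order traversal listing the fields the form should currently render.
function visibleNames(node: Wrapper): string[] {
  let names = [node.field.name];
  for (const child of node.children) names = names.concat(visibleNames(child));
  return names;
}

// Hypothetical sample fields: the voice list depends on the chosen language.
const voiceEn: Field = { name: "voice_en", defaultValue: "female", options: [{ value: "female" }, { value: "male" }], desc: "English voice" };
const voiceZh: Field = { name: "voice_zh", defaultValue: "female", options: [{ value: "female" }], desc: "Chinese voice" };
const language: Field = {
  name: "language",
  defaultValue: "en",
  options: [{ value: "en", relatedFields: [voiceEn] }, { value: "zh", relatedFields: [voiceZh] }],
  desc: "Language",
};

const form = wrap(language);
const before = visibleNames(form); // ["language", "voice_en"]
selectOption(form, "language", "zh");
const after = visibleNames(form); // ["language", "voice_zh"]
```

Replacing `children` wholesale removes every nested dependent field in one step, so no stale inputs survive an option change.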

2. Abstracting the TTS Model Interface

Each TTS model follows a standard Go interface:

```go
import "context"

// Model is the interface every TTS engine implements.
type Model interface {
	Name() string
	Fields() []*Field
	TTS(ctx context.Context, text string, args map[string]string) ([]byte, string, error)
}
```

- The Name() method returns the model name.

- The Fields() method returns the list of required parameters for this model.

- The TTS() method takes input text and parameters, then returns the generated speech.

Since all TTS models implement the same interface, new models can be added without modifying the core server logic.

The frontend dynamically renders only the required fields for each model, and when a request is made, the backend simply forwards the parameters to the selected TTS model.
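
To illustrate why a shared interface keeps the core logic untouched, here is a small sketch written in TypeScript so that it matches the frontend examples. It only mirrors the shape of the Go interface and is not the server's code. The `registry`, `register`, `synthesize`, and `echoModel` names are hypothetical, and treating the string returned next to the audio bytes as a `format` is an assumption.

```typescript
interface Field { name: string; defaultValue: string; }
interface Speech { data: number[]; format: string; } // `format` is an assumed meaning
interface Model {
  name(): string;
  fields(): Field[];
  tts(text: string, args: Record<string, string>): Speech;
}

// Hypothetical registry: models are looked up by name, so the dispatch code
// below never needs to know which concrete models exist.
const registry: Record<string, Model> = {};

function register(model: Model): void {
  registry[model.name()] = model;
}

function synthesize(modelName: string, text: string, args: Record<string, string>): Speech {
  const known = Object.prototype.hasOwnProperty.call(registry, modelName);
  const model = known ? registry[modelName] : undefined;
  if (!model) throw new Error("unknown model: " + modelName);
  return model.tts(text, args); // parameters are forwarded unchanged
}

// A toy model that "speaks" by returning one byte per character.
const echoModel: Model = {
  name: () => "echo",
  fields: () => [{ name: "format", defaultValue: "wav" }],
  tts: (text, args) => ({
    data: text.split("").map((ch) => ch.charCodeAt(0)),
    format: args["format"] || "wav",
  }),
};

register(echoModel);
const speech = synthesize("echo", "hi", { format: "mp3" });
```

Adding another engine in this sketch means writing one more object that satisfies `Model` and calling `register`; `synthesize` stays the same.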

3. Common Server Layer

The Gin-based API server acts as a single entry point for all TTS models. It handles:

✅ Request parsing – Extracts model parameters and user input

✅ Token validation – Ensures authentication for API usage

✅ Routing – Directs the request to the appropriate TTS model
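
The three responsibilities above can be sketched as one small pipeline. This is a framework-free TypeScript illustration, not the Gin handler itself: the query parameter names (`token`, `model`, `text`), the token list, the status codes, and the `echo` model are all assumptions made for the example.

```typescript
interface TtsRequest { token: string; model: string; text: string; args: Record<string, string>; }
interface TtsResponse { status: number; body: string; }
type Synth = (text: string, args: Record<string, string>) => string;

const validTokens = ["secret-token"]; // hypothetical token store
const models: Record<string, Synth> = { echo: (text) => "audio(" + text + ")" };

// Request parsing: reserved keys fill the request, the rest become model args.
function parseQuery(query: string): TtsRequest {
  const req: TtsRequest = { token: "", model: "", text: "", args: {} };
  for (const pair of query.split("&")) {
    const eq = pair.indexOf("=");
    if (eq < 0) continue;
    const key = decodeURIComponent(pair.slice(0, eq));
    const value = decodeURIComponent(pair.slice(eq + 1).replace(/\+/g, " "));
    if (key === "token") req.token = value;
    else if (key === "model") req.model = value;
    else if (key === "text") req.text = value;
    else req.args[key] = value;
  }
  return req;
}

function handle(query: string): TtsResponse {
  const req = parseQuery(query);
  // Token validation comes first so unauthenticated callers learn nothing else.
  if (validTokens.indexOf(req.token) < 0) return { status: 401, body: "invalid token" };
  if (req.text === "") return { status: 400, body: "missing text" };
  // Routing: pick the model by name and forward text and args to it.
  const known = Object.prototype.hasOwnProperty.call(models, req.model);
  const synth = known ? models[req.model] : undefined;
  if (!synth) return { status: 404, body: "unknown model" };
  return { status: 200, body: synth(req.text, req.args) };
}

const ok = handle("token=secret-token&model=echo&text=hello%20world");
// ok.status is 200 and ok.body is "audio(hello world)"
```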

Supported TTS Models

1. Edge Model

Microsoft Edge provides a read-aloud API that requires a WebSocket connection. To optimize performance, I implemented a WebSocket pool to reuse existing connections instead of creating a new one for every request.
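
The pooling idea can be sketched as follows. This is a simplified, synchronous TypeScript illustration rather than the server's Go implementation: `Conn` is a hypothetical stand-in for a WebSocket connection, dialing is faked by incrementing a counter, and the `maxIdle` limit is an assumption.

```typescript
// Hypothetical stand-in for a WebSocket connection.
interface Conn { id: number; open: boolean; }

class ConnPool {
  private idle: Conn[] = [];
  private created = 0;
  private maxIdle: number;

  constructor(maxIdle: number) {
    this.maxIdle = maxIdle;
  }

  // Reuse an idle connection that is still open; otherwise dial a new one.
  acquire(): Conn {
    while (this.idle.length > 0) {
      const conn = this.idle.pop() as Conn;
      if (conn.open) return conn;
    }
    this.created += 1;
    return { id: this.created, open: true }; // stands in for a real dial
  }

  // Keep a healthy connection for reuse; drop it if it is closed or the pool is full.
  release(conn: Conn): void {
    if (conn.open && this.idle.length < this.maxIdle) this.idle.push(conn);
    else conn.open = false;
  }

  dialCount(): number {
    return this.created;
  }
}

const pool = new ConnPool(2);
const first = pool.acquire();
pool.release(first);
const second = pool.acquire(); // the same connection is handed out again
```

A real pool would also need to handle concurrent callers and connections that the remote side closes while idle.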

2. Coqui-ai/TTS

Coqui TTS is an open-source speech synthesis model.

The setup process is as follows:

- Install Conda

- Install Coqui-TTS via pip

- Run the model from Go as a Python subprocess

As for performance, in my testing:

- Without a GPU: generating 5 seconds of speech takes 20 seconds, roughly four times slower than real time, which makes it unsuitable for real-time applications.

- With a GPU: The process is significantly faster and practical for real-world use.

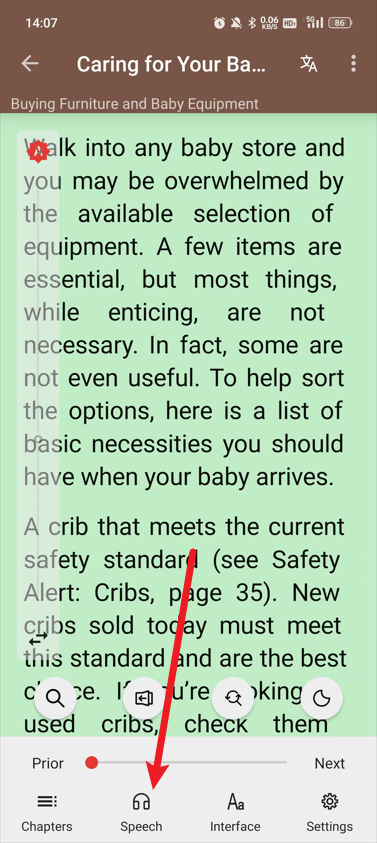

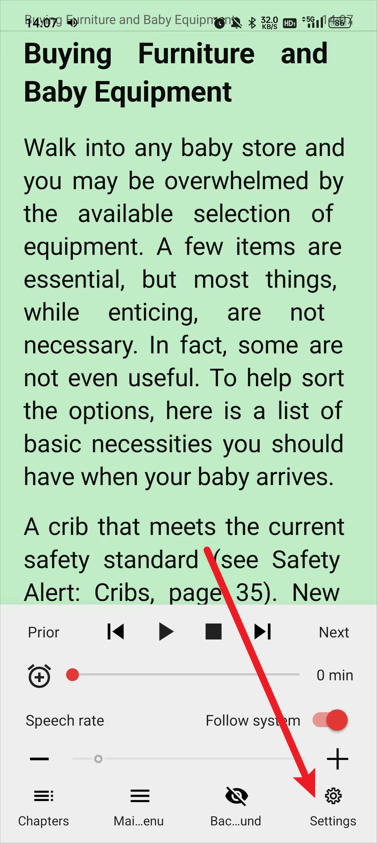

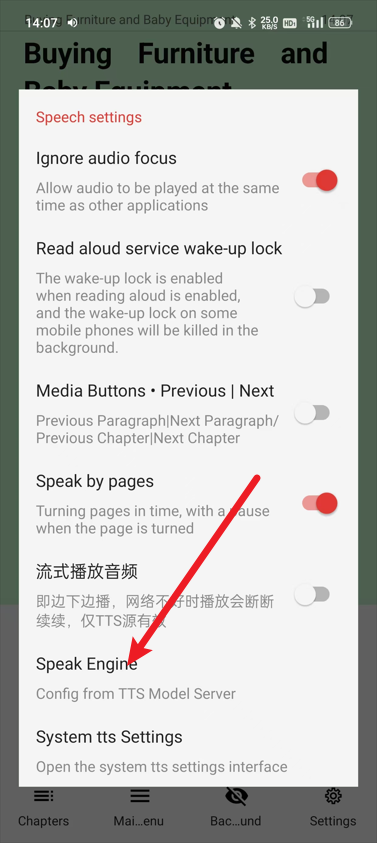

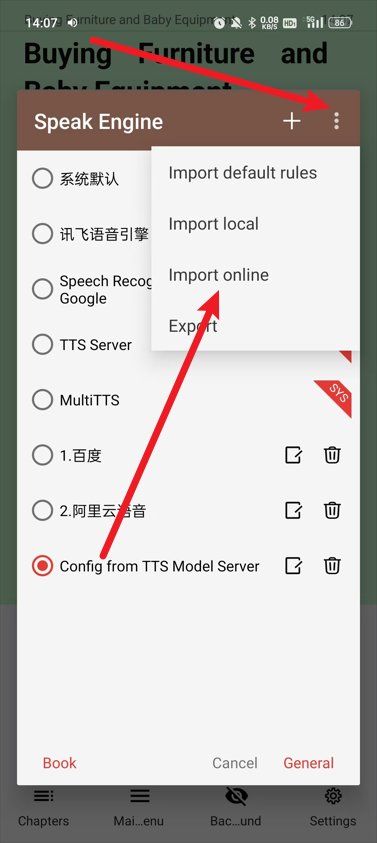

Integration with Legado

Legado is an open-source reading app that allows custom TTS engine integration.

- Open the TTS Model Server Web UI

- Choose a TTS model

- Generate a subscription URL

- Import the URL in Legado's TTS settings

Now, Legado can use TTS Model Server as its speech engine!
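
For a sense of what a generated subscription URL could carry, here is a hypothetical sketch. The `/subscribe` path, the parameter names, the sample values, and the `buildSubscribeUrl` helper are placeholders invented for illustration; the real URL produced by the web UI may look different.

```typescript
// Encode the chosen model, the API token, and the model-specific arguments
// into a single URL that a reader app could import.
function buildSubscribeUrl(
  base: string,
  model: string,
  token: string,
  args: Record<string, string>
): string {
  const params: string[] = [
    "model=" + encodeURIComponent(model),
    "token=" + encodeURIComponent(token),
  ];
  // Sort keys so the same selection always yields the same URL.
  for (const key of Object.keys(args).sort()) {
    params.push(encodeURIComponent(key) + "=" + encodeURIComponent(args[key]));
  }
  return base.replace(/\/+$/, "") + "/subscribe?" + params.join("&");
}

const url = buildSubscribeUrl("http://localhost:8080/", "edge", "my token", {
  voice: "en-US",
  rate: "+10%",
});
// url is "http://localhost:8080/subscribe?model=edge&token=my%20token&rate=%2B10%25&voice=en-US"
```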

Future Improvements

✅ Caching Mechanism – Avoid redundant processing for repeated text inputs

✅ More TTS Models – Add support for ElevenLabs, OpenAI TTS, and others

✅ WebSocket Streaming – Enable real-time speech generation
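
The caching idea could look roughly like this. It is a minimal in-memory sketch under assumptions: `cacheKey`, `withCache`, and `slowSynth` are hypothetical names, audio is represented as a string, and a real cache would also need size limits and eviction.

```typescript
type Synth = (text: string, args: Record<string, string>) => string;

// Canonical key: the same model, text, and args (in any order) give the same key.
function cacheKey(model: string, text: string, args: Record<string, string>): string {
  const sorted = Object.keys(args).sort().map((k) => [k, args[k]]);
  return JSON.stringify([model, text, sorted]);
}

// Wrap a synthesis function so repeated requests skip the expensive call.
function withCache(model: string, synth: Synth): Synth {
  const cache: Record<string, string> = {};
  return (text, args) => {
    const key = cacheKey(model, text, args);
    if (!Object.prototype.hasOwnProperty.call(cache, key)) {
      cache[key] = synth(text, args);
    }
    return cache[key];
  };
}

let calls = 0;
const slowSynth: Synth = (text) => {
  calls += 1; // count how often the underlying model actually runs
  return "audio(" + text + ")";
};

const cached = withCache("demo", slowSynth);
cached("hello", { voice: "a", rate: "1" });
cached("hello", { rate: "1", voice: "a" }); // same request, different key order
// calls is 1: the second request was served from the cache
```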

Conclusion

TTS Model Server provides a unified, extensible, and dynamic way to access multiple TTS models. By abstracting the backend model interface and using a configurable frontend, it puts different engines behind a single API and plugs into reading apps such as Legado.